Many organizations are blind to delays. We are so focused on the time we actually spend working on activities that we ignore the waiting time between them. This is a huge problem because the waiting time usually exceeds the working time by far. As a result, we deliver value to our customers a lot more slowly than necessary. This is an adaptiveness anti-pattern: to be adaptive we need shorter lead times so we can measure the results of our actions and assumptions faster.

What can you expect from this article?

- An in-depth analysis of the typical causes and consequences of delays in organizations.

- Clear ways to recognize the pattern of delays blindness in your organization.

Favoring processing time over lead time

The value stream

A value stream is simply all the activities and people involved in delivering value to the customer from order to cash, or concept to cash. Value streams typically match products, product lines, services, or customer segments.

A value stream

Let’s consider a typical value stream in your organization. Take a moment to identify one, and then quickly sketch all the activities involved from the order to the final cash receipt. Or, if you are doing bespoke projects, from concept to cash receipt. If you are not selling a physical product but, for example, monthly subscriptions to your software product, define the process of adding a feature to the software. It doesn’t have to be perfect or complete. It is just a quick sketch to get us going. It might help to think of all departments involved and their contribution to the process.

Now make a rough estimate of the total time involved from the start of the value stream to the end. Again, it doesn’t have to be a mathematical calculation; your first thoughts are good enough. Of course I don’t know what the actual lead time for your value stream is, but I am willing to bet it is substantially higher than you just estimated. How do I know? Because it usually is when we do this exercise and then measure the real lead time. In most organizations the actual lead time of a value stream is substantially higher than people expect it to be.

Processing time vs Lead time

When I asked you to quickly estimate the lead time of your value stream example, chances are that you made a rough estimate of each of the activities involved and added them up. The sum of the processing times of the activities roughly equals the total lead time. Sounds reasonable, right? Unfortunately, it is quite wrong. But don’t feel bad: most people make the same mistake.

Measuring processing time

We tend to have a bias towards processing time over lead time

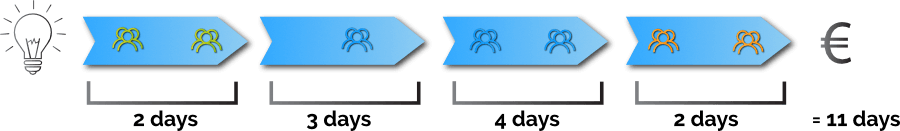

Our focus on efficiency causes us to consider only the time spent working on the individual activities. We are actually blind to the delays between the activities. But as it turns out, most of the total time in a typical value stream is spent waiting. Waiting for approval, waiting for feedback, waiting for others to do their part before you can continue. Lead time includes processing time as well as waiting time. And the alarming part is that delays (waiting time) actually account for over 80% of the lead time in a typical situation!

Here’s a typical value stream map that depicts both processing time and lead time. We can see the enormous amount of time wasted in waiting.

Value stream map
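
The difference between the two measures can also be sketched in numbers. The following is a minimal Python sketch, not a measurement: the step names and all durations are invented for illustration, and `Step` and `summarize` are hypothetical helpers. It adds up processing time and waiting time per activity and reports how much of the lead time is spent waiting.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    processing_days: float  # time someone actually works on the item
    waiting_days: float     # time the item sits idle before this step starts


def summarize(steps):
    """Return (lead_time, processing_time, waiting_share) for a value stream."""
    processing = sum(s.processing_days for s in steps)
    waiting = sum(s.waiting_days for s in steps)
    lead_time = processing + waiting
    return lead_time, processing, waiting / lead_time


# Hypothetical feature request; every number here is invented.
steps = [
    Step("Specify", processing_days=1, waiting_days=4),
    Step("Approve budget", processing_days=0.5, waiting_days=10),
    Step("Build", processing_days=5, waiting_days=6),
    Step("Review and release", processing_days=1.5, waiting_days=12),
]

lead_time, processing, waiting_share = summarize(steps)
print(f"Sum of processing time: {processing} days")
print(f"Actual lead time:       {lead_time} days")
print(f"Share spent waiting:    {waiting_share:.0%}")
```

With these invented numbers the activities add up to 8 days of work, yet the item takes 40 days to get through the value stream, 80% of which is waiting. Filling in measured values for your own value stream is the quickest way to check your earlier estimate.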

As we are typically blind to the delays in our processes, we tend to miss the fact that delays actually cost money. It is not just that the value is delivered later. The cost involved in delays, or Cost of Delay for short, can be seen as an opportunity cost: we could simply have made more money.
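
As a rough illustration of that opportunity cost, here is a minimal Python sketch. It assumes, purely for simplicity, that the delayed item would have earned a constant amount per week; the function name and the figures are hypothetical.

```python
def cost_of_delay(value_per_week, weeks_delayed):
    """Opportunity cost of delivering late, assuming a constant weekly value."""
    return value_per_week * weeks_delayed


# Hypothetical figures: a feature expected to earn 5,000 per week
# that spends 8 weeks waiting between activities.
print(cost_of_delay(value_per_week=5_000, weeks_delayed=8))
```

Real revenue curves are rarely this flat, so treat the result as an order-of-magnitude indication rather than a forecast.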

Lean – removing waste – a word of caution

Lean Thinking is all about removing waste and as such can really be of help in analyzing the source of delays and removing some of them. Especially methods like Lean Six Sigma have a strong focus on this.

While there is nothing wrong with Lean Six Sigma in general, the problem is that it is usually used in isolation, meaning Lean Six Sigma black belts come in with the sole assignment of making processes more efficient. Period. The risk is overdoing things, which I see a lot in lean projects. Efficiency becomes the goal instead of a means to an end. While drinking a lot of water is definitely healthy, there is such a thing as drinking too much water, which can lead to water intoxication, and that is quite dangerous. Too much Lean Six Sigma, too much focus on efficiency, is quite dangerous in circumstances where we need adaptiveness. In complex circumstances we need to discover the future rather than plan it, which is not a process built for efficiency. In complexity we even need a certain degree of inefficiency to be effective.

So my main point in this article is to warn about the danger of being blind to delays, not to encourage you to squeeze the last drop of buffer and creativity out of your processes in an attempt to remove all possible delays.

You need to find a balance.

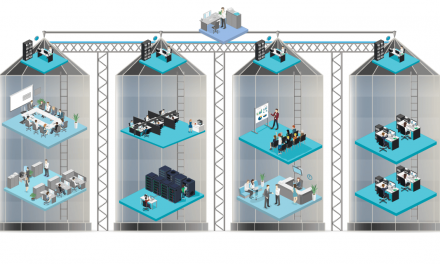

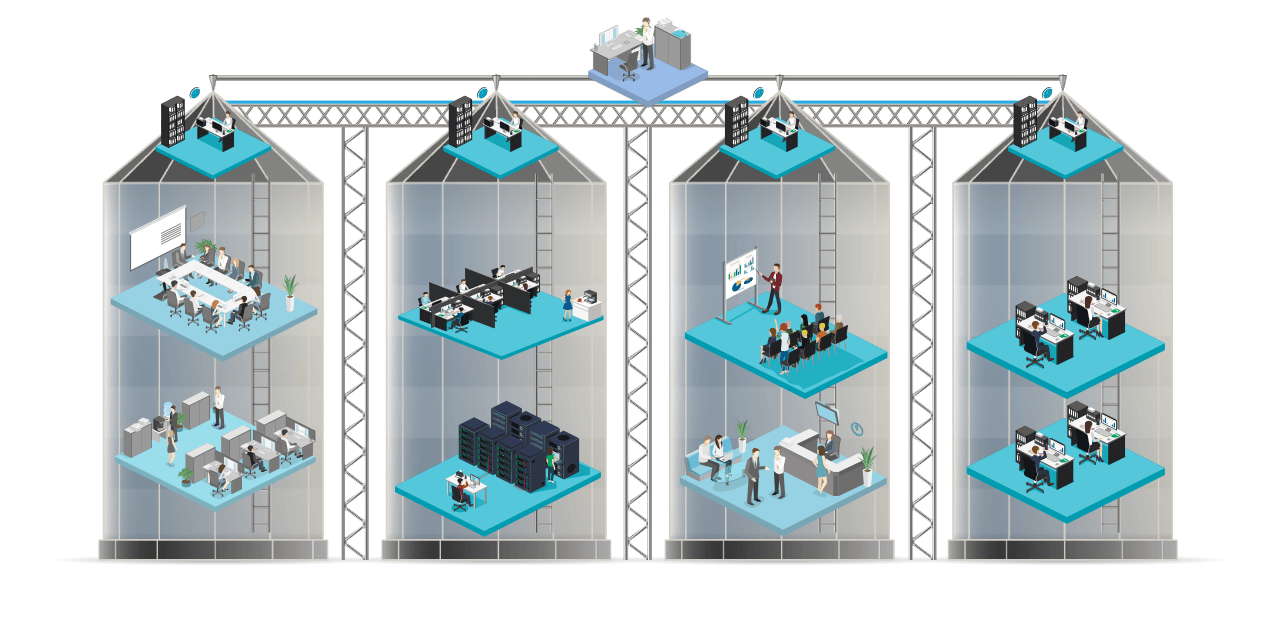

The curse of the silos

Much of the blindness to delays is caused by the silo structure almost all organizations have. The value stream is a horizontal process, but the silo structure of the organization is a vertical one that cuts right through it and creates artificial borders.

The fact that a vertical department is responsible for an activity in the value stream leads to local optimization. We try to organize the work the best we can for our purposes, to meet our goals and our KPIs. But that easily leads to delays elsewhere in the value stream. For example, someone needing some materials has to follow a procedure that is efficient for the party processing the request. But that same procedure probably leads to a delay for the one needing the materials. What is efficient for one leads to waiting time for the other.

What’s even worse is a specific type of delay caused by batching which we will discuss in depth in the next section. You can imagine that for the request processing party it is efficient to batch requests and then process them all at once vs processing them as they come in. But this leads to an extra average delay for the person who filed the request.
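
The extra delay caused by batching requests can be made concrete with a small Python sketch. It rests on simplifying assumptions that are not from a real process: one request arrives every working day, batches are processed at the end of each fixed interval, and the processing itself takes no time. The function `average_wait` is a hypothetical helper.

```python
def average_wait(arrival_days, batch_interval_days):
    """Average number of days a request waits for the next batch run.

    Batches run at the end of every interval (days 5, 10, ... for an
    interval of 5). Processing itself is treated as instantaneous.
    """
    waits = []
    for day in arrival_days:
        # Index of the first batch run on or after the arrival day.
        runs_needed = -(-day // batch_interval_days)  # ceiling division
        next_run = runs_needed * batch_interval_days
        waits.append(next_run - day)
    return sum(waits) / len(waits)


# Hypothetical stream: one request arrives every working day for 20 days.
arrivals = list(range(1, 21))

print(average_wait(arrivals, 1))  # handled on the day of arrival
print(average_wait(arrivals, 5))  # handled once per five-day week
```

Under these assumptions, handling requests as they come in adds no waiting, while a weekly batch adds two days of waiting per request on average, before any work has been done on it.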

The silo structure leads to local optimization and delays

The silo structure also includes departments that are supposed to facilitate the business departments, like finance, procurement, legal, and facility management. But the fact that these departments have their own KPIs instead of the goal to help business departments meet theirs often leads to sub-optimal solutions for the business departments, which cause… further delays.

Curse of the silos

If you want to know more about the problems of the silo structure, read our series on The curse of the silos. It’s an anti-pattern of its own!

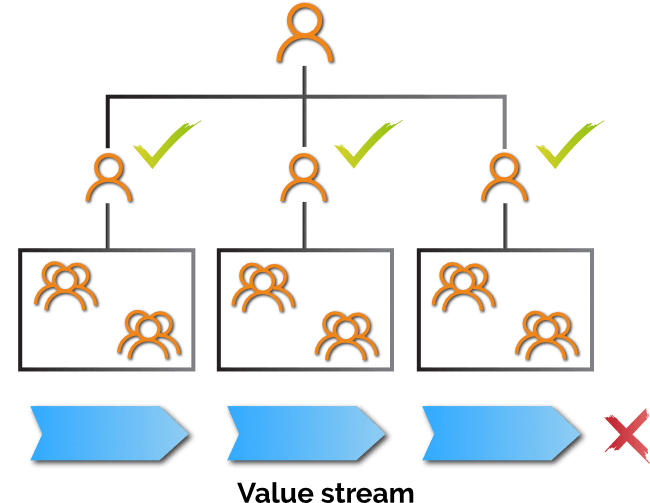

Centralized decision-making

Delays are often caused by the need for permission, especially when the request crosses the border of a silo. They are also often caused by waiting for information. We tend to be blind to the delays we cause because the burden is shifted to another part of the value stream that is under the control of another silo. We don’t see them because our KPIs don’t measure them.

The effect of the silo structure is reinforced by the hierarchical structure. The fact that the people doing the work are often not the ones allowed to decide does not help the speed of the work. Waiting for permission within a silo leads to a lot of delays. But it gets worse when we cross silos. If worker A in department X needs something from worker B in department Z, the fastest and most obvious way would be for A to talk to B. But all too often worker A will talk to their boss instead, who will raise the issue with their counterpart in department Z, who will then talk to worker B. It sounds silly when you describe it, but you will probably recognize it and admit that you see it happen a lot. And maybe, just maybe, you have done it yourself too. Hey, there are advantages to having a manager as well, like being able to delegate work to them…

High utilization and batching

There is a deeply entrenched belief that to make the most of our employees we must carefully plan their work in order to fully optimize the time they can spend working on their tasks. The same idea applies to expensive machines: since they are expensive, we had better keep them running as much as possible, right? Many task- or project-oriented organizations have a dedicated planning department whose job it is to make the most efficient use of our human resources. Home care organizations are plagued by this: a nurse’s time is sometimes planned to the minute. The time each activity should take, the time spent traveling between patients, the most efficient route between patients. It actually is a spectacular and utterly demotivating deep dive back into the past, to the times of Frederick Taylor’s Scientific Management. Why doesn’t it work? Because the work of a nurse is much more complex in nature than can be handled by a central planning department and rigid procedures. So all exceptions to the rule still need to be handled by the nurse herself, making the strict timelines a burden instead of an aid. If you can find a better way to kill the performance and motivation of highly skilled people who are intrinsically motivated by nature to help others, please let me know.

High Utilization

This concept is called utilization, and in many organizations utilization is high. Unfortunately, the idea behind it does not even work for the expensive machine we mentioned, and even less so for human beings. I sometimes ask: “What does a highway look like at 100% utilization?” The answer is: “Like a giant parking lot.” Now I fear the day that this is no longer true and connected auto-pilot systems in our cars create a train-like system of cars no longer under our control. But until that day I can use this example. Everybody understands that high utilization on a highway leads to traffic jams, which lead to you arriving late. But even worse, variability goes up too when utilization is high, meaning it gets harder to predict when you will arrive at your destination as traffic jams increase. Now why does it seem so difficult for business leaders to transfer this notion to their organizations and accept the fact that high utilization leads to longer lead times and higher variability? It makes things worse!

“High utilization leads to longer lead times and higher variability”

Traffic jams lead to higher lead times and variability

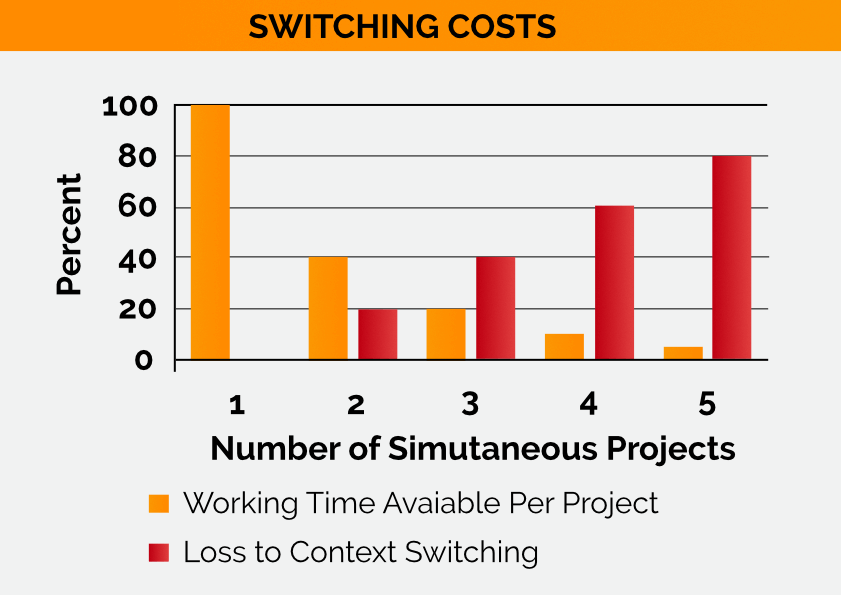
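
The highway effect can be sketched with a textbook queueing formula. The Python sketch below assumes the simplest single-server model (M/M/1: random arrivals, random processing times, one worker), which is a modelling assumption of this example and not something measured in a real organization. In that model the average lead time equals the processing time divided by (1 - utilization); `average_lead_time` is a hypothetical helper name.

```python
def average_lead_time(processing_time, utilization):
    """Mean time in system for an M/M/1 queue: processing_time / (1 - utilization)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be at least 0 and below 1")
    return processing_time / (1 - utilization)


for utilization in (0.5, 0.8, 0.9, 0.95, 0.99):
    lead_time = average_lead_time(processing_time=1.0, utilization=utilization)
    print(f"{utilization:.0%} utilized: lead time is about {lead_time:.0f}x the processing time")
```

In this simplified model the lead time doubles at 50% utilization and explodes as utilization approaches 100%. The spread of individual lead times grows along with the average, which matches the point about predictability. Real organizations are messier than a single queue, but the shape of the curve is the point.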

Switching costs

We talked about delays in the value stream activities. Many people to whom I explain that concept argue: “Yeah, but it is not like those people will actually be idle waiting. They will do other work in the meantime.” Exactly! Which makes things worse! High utilization is even more damaging to human beings than it is to machines because of context-switching costs. It is almost impossible in our modern economies to have high utilization without multitasking, except perhaps for routine factory work. People cannot multitask. No, even women can’t. Sorry. Our brains are not capable of multitasking like computers are. When we have to perform multiple tasks at the same time, we constantly switch between them. And while women might be better at this, it is still an expensive process. You might be able to listen to music in the background while reading your mail because these tasks use different cognitive functions of the brain, but for most tasks it just does not work. The more complex the tasks, the higher the cost of context switching [1]. The cost of switching is almost always underestimated. We seem to be fairly capable of switching between up to two tasks. Two is also efficient because if you get stuck on one task for a moment, you can still make progress on the other. But beyond that, switching costs quickly go up [2].

Well, to be fair our brains do multi-task, but at a subconscious level. This doesn’t help us with the conscious tasks we have to perform.

There is actually a third disadvantage to high utilization: it leads to more mistakes. You probably recognize this yourself. In an effort to regain your focus when you switch tasks, it is easy to miss something and make a mistake.

So the people involved might be very busy working on several tasks at the same time to fill in the waiting time between activities. We feel we make good use of everybody’s time. But now every individual task takes longer to complete.
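
A small Python sketch shows why every task takes longer. It compares finishing tasks one after another with working on all of them at once. The 20% capacity loss per additional open task is a hypothetical figure chosen for illustration, not a measured value, and `finish_days` is a hypothetical helper.

```python
def finish_days(task_days, multitask, switch_loss_per_extra_task=0.2):
    """Return the day on which each task is finished.

    task_days: working days each task needs when done with full focus.
    multitask: if False, tasks are done one after another; if True, all
        open tasks share the person's time equally.
    switch_loss_per_extra_task: hypothetical share of capacity lost to
        context switching for every additional task in progress.
    """
    if not multitask:
        finished, today = [], 0.0
        for days in task_days:
            today += days
            finished.append(today)
        return finished

    remaining = [float(days) for days in task_days]
    finished = [0.0] * len(task_days)
    today = 0.0
    while any(r > 0 for r in remaining):
        open_tasks = [i for i, r in enumerate(remaining) if r > 0]
        # Capacity left after switching overhead, split over the open tasks.
        capacity = max(0.1, 1 - switch_loss_per_extra_task * (len(open_tasks) - 1))
        rate = capacity / len(open_tasks)
        # Advance time until the smallest open task is done.
        step = min(remaining[i] for i in open_tasks) / rate
        today += step
        for i in open_tasks:
            remaining[i] -= step * rate
            if remaining[i] <= 1e-9:
                remaining[i] = 0.0
                finished[i] = today
    return finished


tasks = [10, 10, 10]  # three hypothetical tasks of ten focused days each
print(finish_days(tasks, multitask=False))
print(finish_days(tasks, multitask=True))
```

With these assumed numbers, working sequentially finishes the tasks on days 10, 20 and 30. Juggling all three at once finishes every one of them around day 50, so even the last task is later than it would have been.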

The drawbacks of large batches

Individual multitasking because of high utilization is a form of batching. We perform work in batches instead of starting and finishing tasks one by one. This gets worse when we have to work together in teams or across the value stream. As long as I can start and finish the entire task on my own, I am the only one to suffer from batching. But as soon as others depend on me to finish a task before they can start one themselves, things get worse. In our value stream context it is easy to find examples of batching, because any type of request processing is prone to it. Budget allocation, architecture reviews, project approval, facility requests… these are all examples where the processing party tends to batch work because they feel it is more efficient. But it is not. At least not for the people waiting for the request to be processed, because large batches lead to longer lead times for the individual request. So while it might be convenient for the people processing the requests, each individual request takes longer to process, increasing delays in the value stream. This is also explained by Little’s Law. No, it is not a tiny law; it is named after Professor John Little.

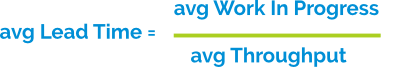

Little’s Law

Little’s Law can be stated as: average Lead Time = average Work in Progress divided by average Throughput. Work in Progress (WIP) is the amount of work you are working on at the same time. Throughput is the rate at which you are able to complete work. Now let’s say we are working on 12 items at the same time: WIP = 12. Suppose we can complete 12 tasks in a month: Throughput = 12. Following Little’s Law, this means that the average Lead Time for each task is 12 / 12 = 1 month. Now suppose we put a limit on the amount of work we do at the same time; let’s say we reduce our batch size to 6. It doesn’t mean we do less work in the end: we still have 12 tasks to finish and we still complete 12 tasks a month, we only agree not to work on more than 6 tasks at the same time. What happens to the average Lead Time? It becomes 6 / 12 = 0.5 month. It is cut in half!
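
The arithmetic of this example fits in a few lines of Python. The sketch assumes, as the example does, that throughput stays at 12 tasks per month when the WIP limit is lowered; the function name `littles_law_lead_time` is a hypothetical helper.

```python
def littles_law_lead_time(wip, throughput_per_month):
    """Little's Law: average lead time = average WIP / average throughput."""
    return wip / throughput_per_month


print(littles_law_lead_time(wip=12, throughput_per_month=12))  # months
print(littles_law_lead_time(wip=6, throughput_per_month=12))   # months
```

The first call gives an average lead time of one month, the second half a month. Note that Little’s Law relates long-run averages; it says nothing about how long any single task will take.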

“Simply reducing the amount of Work In Progress (the number of tasks/activities/projects we work on at the same time) can have a huge effect on reducing lead time.”

I remember a TV show where a journalist was complaining to the police about the way they handled cases of stalking. One particular complaint was that a police officer might promise to call back and then not do so, or do so much later than promised. The police spokesperson tried to explain that their capacity to work on these cases was limited. They worked very hard on them and tried to satisfy everybody. At the very least, everybody should be able to file an official complaint. The police were clearly working hard; nobody had any doubts there. But Little’s Law could have provided some useful insights. Although highly controversial, it might actually be better to reduce the number of cases the police take on at the same time. The idea was: if we can handle twelve cases, and we are fully booked, and a 13th case comes by, only that last case gets stalled. But this isn’t true, as Little’s Law tells us. Every individual case gets delayed when we try to handle too many cases at the same time. This is a very widespread mistake.

“When you work on too many tasks at the same time, adding one more task will not just delay that last task. It will delay them all!”
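
The thirteenth-case effect can be sketched in Python. The model is deliberately simple and rests on assumptions of this example: every case needs the same effort, capacity is twelve cases per month, open cases share that capacity equally, and switching costs are ignored. The function `completion_months` is a hypothetical helper.

```python
def completion_months(cases, capacity_per_month, wip_limit=None):
    """Month in which each case is finished, assuming equal effort per case.

    Without a WIP limit, all cases are worked on at once and share capacity
    equally. With a WIP limit, cases beyond the limit wait for a free slot.
    """
    limit = cases if wip_limit is None else wip_limit
    finished = []
    started = 0
    clock = 0.0
    while started < cases:
        in_progress = min(limit, cases - started)
        # Equal cases that share capacity equally all finish together.
        clock += in_progress / capacity_per_month
        finished.extend([clock] * in_progress)
        started += in_progress
    return finished


print(completion_months(cases=13, capacity_per_month=12))
print(completion_months(cases=13, capacity_per_month=12, wip_limit=12))
```

Without a limit, all thirteen cases finish after a little more than a month. With a limit of twelve, the first twelve cases are done after exactly one month and only the thirteenth is later. Adding switching costs to the model would make the unlimited scenario look worse still.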

Conclusion

Organizations tend to be blind to delays. One reason for this is the focus on processing time over lead time. We focus on the actual time spent working on tasks, thereby losing track of the time we spend waiting. This is mainly caused by the silo structure of our organizations and centralized decision-making. The other reason we miss delays is high utilization of people and batching of work. The effect of high utilization on human beings is amplified by the high costs of switching between tasks.

There are important lessons to be learned here if we are trying to transform to an adaptive organization. As a sustainable competitive advantage no longer exists, we need to constantly reinvent ourselves. Because most new business ideas will fail, we need to test many. This means we need short feedback cycles to validate new business models. And as we realize that business models have a limited shelf life we need to recoup investments and gain market share fast. It is all about speed, the speed of learning. We have seen that there is much more to be gained in reducing delays as opposed to optimizing processing time. So this is an important topic in the Adaptive Organization.

Now if you have a picture in mind of an army of Lean Six Sigma black belts marching in, you have the wrong picture. Our work and processes are getting more complex. The best way to reduce delays in such environments has more to do with distribution of authority, intrinsic motivation, shared responsibility, and clarity of purpose and vision than with a mathematical approach to reducing waste. These solutions are the topic of many other articles on this website.

Bibliography

List of notes and sources we reference from.

Notes

- [1] Multitasking Can Make You Lose … Um … Focus. The New York Times, October 25, 2008. https://www.nytimes.com/2008/10/25/business/yourmoney/25shortcuts.html

- [2] Weinberg, G.M. Quality Software Management, Vol. 1: Systems Thinking. New York: Dorset House, 1992.
